Introduction

In a world where technology advances at breakneck speed, there’s one field that continues to captivate minds and reshape industries: Artificial Intelligence (AI). Imagine a realm where machines learn, reason, and make decisions in ways loosely akin to humans, a realm where the boundaries between science fiction and reality blur with each passing breakthrough.

Welcome to the fascinating world of AI, where innovation knows no bounds and possibilities are limited only by imagination. In this blog, we embark on a journey to unravel the intricate layers of AI Computer Architecture, a subject often shrouded in mystery and complexity.

But why should you care about AI computer architecture? Well, picture this: Every time you ask a voice assistant for the weather forecast, perform a face unlock on your smartphone, or receive personalized recommendations on your favorite streaming platform, you’re experiencing the power of AI at work. Behind the scenes, sophisticated hardware orchestrates these marvels, laying the foundation for the AI revolution sweeping across industries.

So, whether you’re a seasoned tech enthusiast, an aspiring computer scientist, or simply curious about the inner workings of the technology shaping our future, join us as we demystify AI Computer Architecture. Together, let’s uncover the foundations of this transformative field and explore the possibilities that lie ahead. Brace yourself for a journey of discovery, innovation, and endless fascination. The future of AI awaits, and it’s time to dive in.

What is AI Computer Architecture?

At the heart of every AI system lies its architectural framework—an intricate web of components meticulously designed to process, analyze, and interpret vast amounts of data with unprecedented speed and accuracy. But what exactly is AI computer architecture, and why is it crucial in the realm of artificial intelligence?

In essence, AI computer architecture refers to the specialized hardware and software infrastructure tailored to support AI algorithms and applications. Unlike traditional computing systems, which rely primarily on central processing units (CPUs) for executing instructions, AI architectures leverage a diverse array of hardware accelerators, specialized processors, and optimized algorithms to tackle complex computational tasks inherent to AI.

Key Components and Principles:

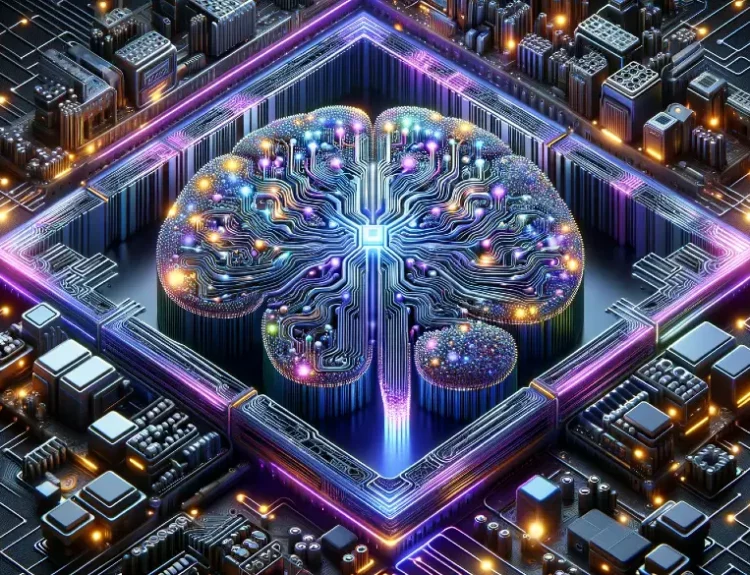

- Neural Network Hardware: At the core of many AI systems lies the neural network, a web of interconnected artificial neurons loosely inspired by the workings of the human brain. Neural network hardware encompasses specialized processors optimized for parallel processing tasks, enabling efficient execution of neural network computations.

- GPU Architecture for AI: Graphics Processing Units (GPUs), initially developed for rendering graphics in video games, have emerged as a powerhouse in the realm of AI. Thanks to their parallel processing capabilities, GPUs excel at accelerating matrix and vector operations inherent to neural network training and inference tasks.

- CPU vs. GPU for AI: While CPUs remain indispensable for general-purpose computing tasks, GPUs typically deliver much higher throughput and efficiency on highly parallel AI workloads such as neural network training. The debate over CPU vs. GPU for AI often centers on their respective strengths and trade-offs in handling specific computational tasks.

- FPGA in AI Systems: Field-Programmable Gate Arrays (FPGAs) represent another breed of hardware accelerator gaining traction in AI applications. Unlike CPUs and GPUs, whose circuitry is fixed at manufacture and can be changed only through the software they run, FPGAs contain reconfigurable logic blocks and interconnects that can be rewired after manufacture, allowing customized hardware implementations tailored to specific AI algorithms and tasks.
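To make "reconfigurable" more concrete: many FPGAs implement logic with small lookup tables (LUTs), commonly with 4 or 6 inputs, whose contents are loaded when the device is configured. The sketch below models a hypothetical 4-input LUT in Python; the class and function names are illustrative assumptions, and real devices combine LUTs with routing, flip-flops, and DSP blocks and are configured through vendor toolchains, not code like this.

```python
from itertools import product


class LUT4:
    """Hypothetical model of a 4-input FPGA lookup table (LUT).

    The 16-bit `config` word is the truth table: bit k holds the output for
    the input combination whose bits, read as a binary number, equal k.
    """

    def __init__(self, config: int) -> None:
        if not 0 <= config < 1 << 16:
            raise ValueError("config must fit in 16 bits")
        self.config = config

    def __call__(self, a: int, b: int, c: int, d: int) -> int:
        index = (a << 3) | (b << 2) | (c << 1) | d  # select one truth-table entry
        return (self.config >> index) & 1


def configure(fn) -> LUT4:
    """'Program' a LUT by evaluating fn on all 16 input combinations."""
    config = 0
    for a, b, c, d in product((0, 1), repeat=4):
        index = (a << 3) | (b << 2) | (c << 1) | d
        config |= (fn(a, b, c, d) & 1) << index
    return LUT4(config)


# The same "hardware" reconfigured to implement two different functions.
and4 = configure(lambda a, b, c, d: a & b & c & d)
parity4 = configure(lambda a, b, c, d: a ^ b ^ c ^ d)
print(hex(and4.config))     # 0x8000
print(hex(parity4.config))  # 0x6996
```

Nothing about the LUT itself changes between the two cases; only the stored truth table does. Scaled up to many thousands of LUTs plus programmable wiring, this is what lets an FPGA be reshaped around a particular AI algorithm.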

Understanding the intricate interplay between these components is crucial for optimizing AI performance, reducing computational overhead, and unlocking the full potential of artificial intelligence. In the following sections, we delve deeper into the evolution of artificial intelligence hardware and explore the fascinating world of neural network architecture.

Evolution of AI Computer Architecture Hardware

The evolution of artificial intelligence hardware is a testament to human ingenuity and relentless pursuit of technological advancement. From humble beginnings to the forefront of innovation, AI hardware has undergone a remarkable transformation, shaping the landscape of modern computing and revolutionizing the way we interact with technology.

Historical Overview:

The roots of AI hardware can be traced back to the early days of computing, when pioneering researchers laid the groundwork for artificial intelligence with rudimentary machines and conceptual frameworks. However, it wasn’t until the latter half of the 20th century that significant strides were made in AI hardware development.

Major Milestones:

- Early Computing Era: From the 1940s through the 1960s, pioneers such as Alan Turing and John McCarthy laid the conceptual foundation for artificial intelligence, while stored-program computers and symbolic reasoning systems emerged alongside their work. These nascent technologies, while primitive by today’s standards, paved the way for future innovations in AI hardware.

- Resurgence of Neural Networks: Although neural network models date back to the 1940s and 1950s, their revival in the 1980s, driven by the popularization of backpropagation and the Parallel Distributed Processing (PDP) research program, sparked renewed interest in AI research and in hardware able to support neural network computations. Massively parallel machines such as the Connection Machine brought parallel processing concepts into AI hardware design.

- Parallel Processing Revolution: The late 2000s and early 2010s brought a paradigm shift in AI hardware with the rise of parallel processing on Graphics Processing Units (GPUs). Originally designed for rendering graphics in video games, GPUs became a potent tool for accelerating AI computations, thanks to their high parallelism and floating-point performance.

Impact of Technological Advancements:

Advancements in semiconductor technology, coupled with breakthroughs in microarchitecture design, have fueled the exponential growth of AI hardware capabilities. Moore’s Law, the empirical observation that the number of transistors on a chip doubles roughly every two years, has enabled increasingly powerful and energy-efficient processors tailored for AI workloads, although the pace of this scaling has slowed in recent years.
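As a back-of-the-envelope illustration, assume an idealized, constant doubling period T of two years (real scaling has been less regular). Starting from N_0 transistors, the count N after t years compounds as follows:

```latex
\begin{aligned}
N(t) &= N_0 \cdot 2^{t/T}, \qquad T \approx 2 \text{ years} \\
\frac{N(20 \text{ years})}{N_0} &= 2^{20/2} = 2^{10} = 1024
\end{aligned}
```

Under that assumption, two decades of scaling yields roughly a thousandfold increase in transistors per chip, which is the kind of budget that made dedicated AI hardware practical.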

Furthermore, the convergence of AI with other emerging technologies such as quantum computing, neuromorphic engineering, and photonic computing may push the boundaries of artificial intelligence hardware even further, though much of this work remains at the research stage.

As we navigate the intricate tapestry of AI hardware evolution, it becomes evident that the journey is far from over. With each innovation and breakthrough, we inch closer to realizing the full potential of artificial intelligence and ushering in a new era of human-machine collaboration.

Understanding Neural Network Hardware

Neural network hardware lies at the core of many AI systems, serving as the backbone for processing and analyzing vast amounts of data with remarkable efficiency. At its essence, neural network hardware comprises specialized processors and accelerators optimized for executing the complex computations inherent to artificial neural networks.

Explanation of Neural Network Architecture:

Neural networks, inspired by the workings of the human brain, consist of interconnected layers of artificial neurons that process input data, learn from patterns, and generate output predictions. Each neuron typically computes a weighted sum of its inputs, adds a bias term, and passes the result through a nonlinear activation function; stacked layers of these simple operations transform raw data into meaningful representations that drive decision-making.
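In most networks, a neuron's computation comes down to a weighted sum of its inputs plus a bias, passed through a nonlinear activation. Here is a minimal pure-Python sketch, assuming a sigmoid activation (real networks often use ReLU or other functions); the function names and numbers are illustrative, not taken from any particular framework.

```python
import math
from typing import List, Sequence


def neuron(inputs: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """One artificial neuron: weighted sum plus bias, then a sigmoid activation."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias  # multiply-accumulate
    return 1.0 / (1.0 + math.exp(-z))  # nonlinear "squashing" to (0, 1)


def dense_layer(inputs: Sequence[float],
                weight_rows: Sequence[Sequence[float]],
                biases: Sequence[float]) -> List[float]:
    """A layer is simply many neurons reading the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Example: 3 inputs feeding a layer of 2 neurons (values are arbitrary).
x = [0.5, -1.0, 2.0]
W = [[0.2, 0.4, 0.1], [-0.3, 0.0, 0.5]]
b = [0.0, -0.1]
print(dense_layer(x, W, b))
```

Every neuron repeats the same multiply-and-add pattern, which is why hardware that performs many multiply-accumulates at once maps so well onto neural networks.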

Role of Hardware in Supporting Neural Networks:

Hardware plays a pivotal role in facilitating the execution of neural network operations, providing the computational resources necessary to train and deploy AI models effectively. Specialized processors, such as Tensor Processing Units (TPUs) and dedicated AI accelerators, are designed to handle the matrix and vector computations prevalent in neural network training and inference tasks.
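Much of that matrix and vector work comes from running a layer over a batch of inputs. That operation reduces to a single matrix multiplication, often called a GEMM (general matrix multiply). The naive pure-Python sketch below shows the reduction and counts the multiply-accumulate (MAC) operations involved; the helper names and layer sizes are hypothetical, chosen only for illustration.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Naive matrix multiply: C[i][j] = sum over p of A[i][p] * B[p][j]."""
    k, m = len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions must match")
    return [[sum(row[p] * b[p][j] for p in range(k)) for j in range(m)]
            for row in a]


def dense_forward(x: Matrix, w: Matrix, bias: List[float]) -> Matrix:
    """Forward pass of a dense layer for a whole batch: Y = X @ W + bias.

    x has shape (batch, in_features); w has shape (in_features, out_features).
    """
    y = matmul(x, w)
    return [[v + bv for v, bv in zip(row, bias)] for row in y]


def mac_count(batch: int, in_features: int, out_features: int) -> int:
    """Multiply-accumulate operations required by that matrix multiply."""
    return batch * in_features * out_features


X = [[1.0, 2.0], [3.0, 4.0]]               # batch of 2 examples, 2 features each
W = [[0.5, -1.0, 0.0], [0.25, 1.0, 2.0]]   # 2 inputs -> 3 outputs
print(dense_forward(X, W, [0.0, 0.0, 1.0]))                      # [[1.0, 1.0, 5.0], [2.5, 1.0, 9.0]]
print(mac_count(batch=64, in_features=4096, out_features=4096))  # 1073741824
```

A single hypothetical 4096-by-4096 layer on a batch of 64 already needs about a billion MACs. The loop above performs them one at a time. Accelerators such as TPUs dedicate large arrays of multiply-accumulate units to performing many of them in parallel.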

Examples of Neural Network Hardware Solutions:

- Tensor Processing Units (TPUs): Developed by Google, TPUs are custom-built ASICs (Application-Specific Integrated Circuits) optimized for accelerating machine learning workloads, particularly those involving deep neural networks. Built around large matrix-multiply units, TPUs excel at matrix multiplications and other tensor operations, delivering high performance and energy efficiency on these workloads.

- Neuromorphic Processors: Inspired by the brain’s neural architecture, neuromorphic processors mimic biological neurons and synapses to perform AI computations in a more energy-efficient and brain-like manner. These specialized hardware solutions hold promise for advancing the field of AI by enabling low-power, real-time learning and inference tasks.

- Spiking Neural Networks: Spiking neural networks (SNNs) represent another class of neural network models that leverage asynchronous, event-driven computation. Hardware implementations of SNNs, such as IBM’s TrueNorth chip, offer energy-efficient solutions for processing spatiotemporal data and emulating the brain’s neural dynamics.
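The event-driven idea behind spiking neural networks can be illustrated with the leaky integrate-and-fire (LIF) model, a common simplified spiking neuron. This is a minimal discrete-time sketch with arbitrary illustrative parameters (`leak`, `threshold`, `reset`). It does not describe how TrueNorth or any other specific chip implements its neurons.

```python
from typing import List


def lif_neuron(input_current: List[float], *, leak: float = 0.9,
               threshold: float = 1.0, reset: float = 0.0) -> List[int]:
    """Simulate a discrete-time leaky integrate-and-fire neuron.

    At each step the membrane potential decays by `leak` and integrates the
    input. When it crosses `threshold`, the neuron emits a spike (1) and the
    potential is reset.
    """
    v = reset
    spikes = []
    for i_t in input_current:
        v = leak * v + i_t          # leaky integration of the input
        if v >= threshold:
            spikes.append(1)        # fire an event
            v = reset               # reset the membrane potential
        else:
            spikes.append(0)        # silent step: no event to propagate
    return spikes


print(lif_neuron([0.3] * 10))  # [0, 0, 0, 1, 0, 0, 0, 1, 0, 0]
```

Even under constant input, the output is a sparse train of spikes rather than a dense value at every step. Event-driven hardware can skip work on the silent steps, which is one source of the energy savings that neuromorphic designs aim for.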

Understanding the intricate interplay between neural network algorithms and hardware architectures is essential for designing efficient and scalable AI systems.

GPU Architecture for AI

In the quest for accelerating AI computations, Graphics Processing Units (GPUs) have emerged as a game-changer, offering unparalleled parallel processing capabilities and computational throughput. Originally designed for rendering graphics in video games, GPUs have found new life as versatile accelerators for a wide range of AI workloads, from training deep neural networks to running real-time inference tasks.

Importance of GPUs in Accelerating AI Computations:

Traditional CPUs devote their silicon to a relatively small number of powerful cores optimized for low-latency sequential execution. GPUs, by contrast, are optimized for throughput-oriented parallel processing, which makes them ideal for the matrix and vector operations prevalent in AI algorithms. By running thousands of simpler cores in parallel, GPUs can dramatically reduce the time required for training and inference tasks.
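Matrix multiplication shows why this parallelism pays off: every output element is an independent dot product, so thousands of them can be computed at the same time. The sketch below mimics a GPU's "one thread per output element" mapping using Python's `ThreadPoolExecutor`. It is only an illustration of the decomposition. Because of CPython's global interpreter lock, it will not run faster than a plain loop. Real GPU code would be written in CUDA or through a framework that targets the GPU.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product

A = [[1, 2, 3], [4, 5, 6]]        # 2 x 3
B = [[7, 8], [9, 10], [11, 12]]   # 3 x 2


def output_element(ij):
    """Work for one 'thread': a single dot product, independent of all others."""
    i, j = ij
    return sum(A[i][k] * B[k][j] for k in range(len(B)))


rows, cols = len(A), len(B[0])
indices = list(product(range(rows), range(cols)))

# One task per output element, like a GPU kernel launching one thread per output.
with ThreadPoolExecutor() as pool:
    flat = list(pool.map(output_element, indices))  # map preserves input order

C = [flat[r * cols:(r + 1) * cols] for r in range(rows)]
print(C)  # [[58, 64], [139, 154]]
```

No task needs another's result. Hardware with thousands of cores can therefore process a large matrix product in a fraction of the time that one core working sequentially would need.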

Comparison of GPU Architectures for AI:

The landscape of GPU architectures for AI is vast and diverse, with major players such as NVIDIA, AMD, and Intel competing to deliver the most powerful and efficient solutions. NVIDIA’s CUDA platform, in particular, has become synonymous with AI acceleration, thanks to its robust ecosystem of software libraries, frameworks, and development tools tailored for machine learning tasks.

Case Studies Highlighting GPU-Based AI Applications:

From image recognition and natural language processing to autonomous driving and drug discovery, GPU-accelerated AI has permeated virtually every industry, driving innovation and unlocking new possibilities. Leading companies and research institutions rely on GPU clusters and supercomputers to tackle some of the most challenging computational problems, pushing the boundaries of AI capabilities.

As we delve deeper into the realm of GPU architecture for AI, it becomes evident that these versatile accelerators are more than just graphics processors—they are catalysts for innovation, powering the next wave of AI-driven breakthroughs.

CPU vs. GPU for AI: Choosing the Right Hardware

When it comes to AI workloads, the choice between CPUs and GPUs hinges on a delicate balance of factors, including computational efficiency, versatility, and cost-effectiveness. CPUs, with their general-purpose architecture and rich instruction sets, excel at handling diverse tasks but may struggle to keep pace with the parallel processing demands of AI algorithms. GPUs, on the other hand, offer massive parallelism and high throughput, making them ideal for accelerating matrix operations and neural network computations.

However, GPUs are less flexible than CPUs when it comes to non-parallelizable tasks or branch-heavy sequential code. Ultimately, the decision comes down to the specific requirements of the AI application, budget constraints, and the need for scalability. In many cases, a hybrid approach works best: the CPU handles control flow, data loading, and preprocessing while the GPU runs the heavy tensor math, striking a balance between performance and versatility.
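Amdahl's law is a standard way to quantify this trade-off. If only a fraction p of a program's runtime can be moved to an accelerator that runs that part s times faster, the overall speedup is 1 / ((1 - p) + p / s). Here is a small sketch with hypothetical numbers; the function name is illustrative.

```python
def amdahl_speedup(parallel_fraction: float, accel_speedup: float) -> float:
    """Overall speedup when only part of a workload is accelerated (Amdahl's law).

    parallel_fraction: share of the original runtime that can be offloaded (0..1).
    accel_speedup: how many times faster that share runs on the accelerator.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / accel_speedup)


# Hypothetical workload: 95% parallelizable, and that part runs 50x faster on a GPU.
print(round(amdahl_speedup(0.95, 50), 2))            # 14.49
# Even an infinitely fast accelerator is capped by the remaining serial 5%:
print(round(amdahl_speedup(0.95, float("inf")), 2))  # 20.0
```

The serial portion, which typically stays on the CPU, bounds the total gain. That bound is one reason hybrid CPU plus GPU systems matter: a faster CPU path for the non-parallel work raises the ceiling that no amount of GPU power can lift.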

The Intersection of Technology and AI Computer Architecture

As AI continues to permeate every facet of our digital landscape, the intersection of technology and AI computer architecture becomes increasingly profound. From edge computing and Internet of Things (IoT) devices to cloud-based AI platforms and supercomputers, advancements in hardware architecture are shaping the trajectory of artificial intelligence, driving innovation and fueling unprecedented growth.

Moreover, the symbiotic relationship between AI algorithms and hardware design underscores the importance of interdisciplinary collaboration, where computer scientists, electrical engineers, and domain experts converge to push the boundaries of what’s possible (check out our article on whether AI will replace computer scientists). Together, they explore new frontiers in neuromorphic computing, quantum AI, and beyond, laying the groundwork for a future where intelligent machines augment human capabilities and transform society in ways we’ve yet to imagine.

Conclusion

In the ever-evolving landscape of artificial intelligence, understanding the foundations of AI computer architecture is paramount. From the intricate interplay between neural network algorithms and specialized hardware to the transformative impact of GPUs on AI acceleration, we’ve embarked on a journey of discovery and innovation.

As we reflect on the evolution of artificial intelligence hardware and the boundless possibilities that lie ahead, one thing becomes abundantly clear: the future of AI is brighter and more promising than ever before. With each breakthrough and advancement, we inch closer to realizing the full potential of intelligent machines and unlocking new realms of human-machine collaboration.

But our journey doesn’t end here—it’s only just begun. As we stand at the intersection of technology and AI computer architecture, let us embrace the challenges and opportunities that lie ahead with unwavering determination and boundless curiosity.

At Verdict, we’re not just spectators in the AI revolution—we’re active participants, shaping the course of history with every search, chat, and shared result. Join us on our mission to craft a future where machine learning and artificial general intelligence evolve through real-world interactions and the insights of our community.

Each interaction on Verdict is a building block that helps us understand and grow together with you, paving the way for a more intelligent and inclusive future. We invite you to explore our platform, engage with our community, and contribute to the collective journey of developing AI that reflects our diversity, learns from our experiences, and expands with our collective knowledge.

Together, let’s embark on a collective journey to transform the future of artificial intelligence. Join us at Verdict, where the power of AI meets the wisdom of the crowd. Together, we can shape a future where intelligence knows no bounds.